Regression Wisdom

Regression issues

- Model fit measures

- Extrapolation

- Outliers

- Leverage

- Influence

- Interpreting a regression

Model fit measures

Regression results

Many parts of these results we already know how to interpret. For now, we will focus on model fit measures.

- \(R^2\)

- \(s_e\)

- Residuals: five-number summary

Residuals - histogram

How would you interpret this residual histogram?

Correlation review

- Recall that a correlation, \(r\), ranges from -1 to 1 and indicates the strength and direction of the linear association between two quantitative variables

- If \(r\) is exactly -1 or 1, there is no scatter around the line: all of the points fall on a straight line

- Note that \(r\) does not indicate the slope, only its sign

- If \(r\) is 0, there is no linear relationship (there could still be a curved one)

- What is the slope in that case?

- \(\hat{y} = b_0 + b_1*x\)
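In simple regression the slope and the correlation are linked by \(b_1 = r \, s_y / s_x\), so \(r\) fixes the sign of the slope but its size also depends on the spreads of \(x\) and \(y\). When \(r = 0\), the slope is 0 and the line is flat at \(\hat{y} = \bar{y}\). A minimal Python sketch with NumPy, using made-up data (not the course data set):

```python
import numpy as np

def slope_from_r(x, y):
    """Least-squares slope computed as b1 = r * s_y / s_x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r = np.corrcoef(x, y)[0, 1]
    return r * y.std(ddof=1) / x.std(ddof=1)

# Made-up data with a positive linear trend
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b1 = slope_from_r(x, y)
b1_direct = np.polyfit(x, y, 1)[0]   # polyfit returns [slope, intercept]
print(b1, b1_direct)                 # both about 0.6

# Made-up data where y = x^2: a clear curved relationship, but r = 0
b1_flat = slope_from_r([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4])
print(b1_flat)                       # slope 0: the line is flat at y-bar
```

The second example shows why \(r = 0\) means no *linear* relationship rather than no relationship at all.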

R squared definition

In the two-variable case, \(R^2\) is the square of \(r\), so it lies between 0 and 1

But, unlike \(r\), \(R^2\) is also meaningful in multiple regression models with several predictors

The percentage of the total variation in \(y\) that is explained by the model

\(R^2 = 1 - \frac{\sum (y - \hat{y})^2}{\sum (y - \bar{y})^2}\): one minus the sum of squared errors from our model divided by the sum of squared errors from the ‘braindead’ model \(\hat{y}=\bar{y}\)

If the \(R^2\) is small, that means our model doesn’t beat the ‘braindead’ model by very much
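The definition \(R^2 = 1 - \text{SSE}/\text{SST}\) can be computed directly. This Python sketch (made-up data, NumPy's `polyfit` for the least-squares line) also checks that, with one predictor, it equals \(r^2\):

```python
import numpy as np

def r_squared(x, y):
    """R^2 = 1 - SSE / SST for a simple least-squares line."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    b1, b0 = np.polyfit(x, y, 1)          # slope, intercept
    y_hat = b0 + b1 * x
    sse = np.sum((y - y_hat) ** 2)        # squared errors from our model
    sst = np.sum((y - y.mean()) ** 2)     # squared errors from y_hat = y_bar
    return 1 - sse / sst

x = [1, 2, 3, 4]
y = [1, 3, 2, 4]
print(r_squared(x, y))                    # about 0.64
print(np.corrcoef(x, y)[0, 1] ** 2)       # also about 0.64 (two-variable case)
```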

When is \(R^2\) “big enough”?

\(R^2\) is useful, but only up to a point

The closer \(R^2\) is to 1, the more of the variation in \(y\) the model explains

- How close is “close”?

- Depends on the situation

- \(R^2\) of 0.5 might be very bad for a model that uses height to predict weight

- Height and weight should be more closely related than that

- \(R^2\) of 0.5 might be very good for a model using test scores to predict future income

- Future income is shaped by many factors and has a lot of noise

Good practice: always report both \(R^2\) and \(s_e\) so readers can judge the fit for themselves

Extrapolating

The farther a new \(x\) value lies from the range of the observed \(x\) values, the less trust we should place in the predicted value of \(y\)

Venturing into new \(x\) territory is called extrapolation

Extrapolation is dubious: it rests on the questionable assumption that the relationship between \(x\) and \(y\) does not change for extreme values of \(x\)

Predicting MPG of cars

1970s data on automobiles

Predicting the Maybach

Will our model do a good job predicting this car’s miles per gallon?

Can we predict this car’s MPG using our model?

Weight: 6581 pounds

Model: \(\hat{y} = b_0 + b_1*x\)

\(\hat{y} = 46.3 + -0.00767*6581\)

\(\hat{y} = -4.17\) miles per gallon

- Nonsense prediction: a car cannot get negative miles per gallon

Be wary of predictions far outside the range of the data used to fit the model
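A simple safeguard is to flag any prediction whose \(x\) falls outside the observed range. This Python sketch uses the rounded slide coefficients; the weight range `x_min`/`x_max` is a hypothetical stand-in, since the slides do not give the range of the 1970s data. With these rounded coefficients the prediction comes out near -4.18, matching the slide's -4.17 up to rounding.

```python
def predict_mpg(weight, b0=46.3, b1=-0.00767, x_min=1600, x_max=5200):
    """Predict MPG from weight (pounds) and flag extrapolation.

    b0 and b1 are the rounded coefficients from the slides.
    x_min and x_max are a HYPOTHETICAL observed weight range.
    """
    y_hat = b0 + b1 * weight
    extrapolating = not (x_min <= weight <= x_max)
    return y_hat, extrapolating

y_hat, extrapolating = predict_mpg(6581)   # the Maybach's weight
print(round(y_hat, 2), extrapolating)      # negative MPG, and flagged as extrapolation
```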

Outliers

Height and net worth

First, we simulate random data for two variables, height and log net worth. Because the two are generated independently, they have no relationship to each other.
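A minimal sketch of this simulation in Python with NumPy. The units and distributions are assumptions (height in centimeters, log10 of net worth in dollars, normal draws with made-up means and SDs), since the slides do not state them. It then adds one hypothetical extreme point, tall and enormously wealthy, loosely standing in for a billionaire such as Steve Ballmer, and refits.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n = 100
height = rng.normal(loc=170, scale=10, size=n)       # cm (assumed)
log_net_worth = rng.normal(loc=5, scale=1, size=n)   # log10 dollars (assumed), independent of height

def fit(x, y):
    """Return (slope, R^2) of the least-squares line."""
    b1, b0 = np.polyfit(x, y, 1)
    r = np.corrcoef(x, y)[0, 1]
    return b1, r ** 2

slope0, r2_0 = fit(height, log_net_worth)

# One HYPOTHETICAL extreme point: about 3 SD above the mean height
# and about 6 SD above the mean log net worth
height_plus = np.append(height, 200)
worth_plus = np.append(log_net_worth, 11)
slope1, r2_1 = fit(height_plus, worth_plus)

print(f"without: slope={slope0:.4f}, R^2={r2_0:.3f}")
print(f"with:    slope={slope1:.4f}, R^2={r2_1:.3f}")
```

Because the added point sits far above and to the right of the cloud, it pulls the slope upward even though the other 100 points have no relationship.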

Adding Steve Ballmer

What will happen to the slope and \(R^2\)?

Steve Ballmer plot

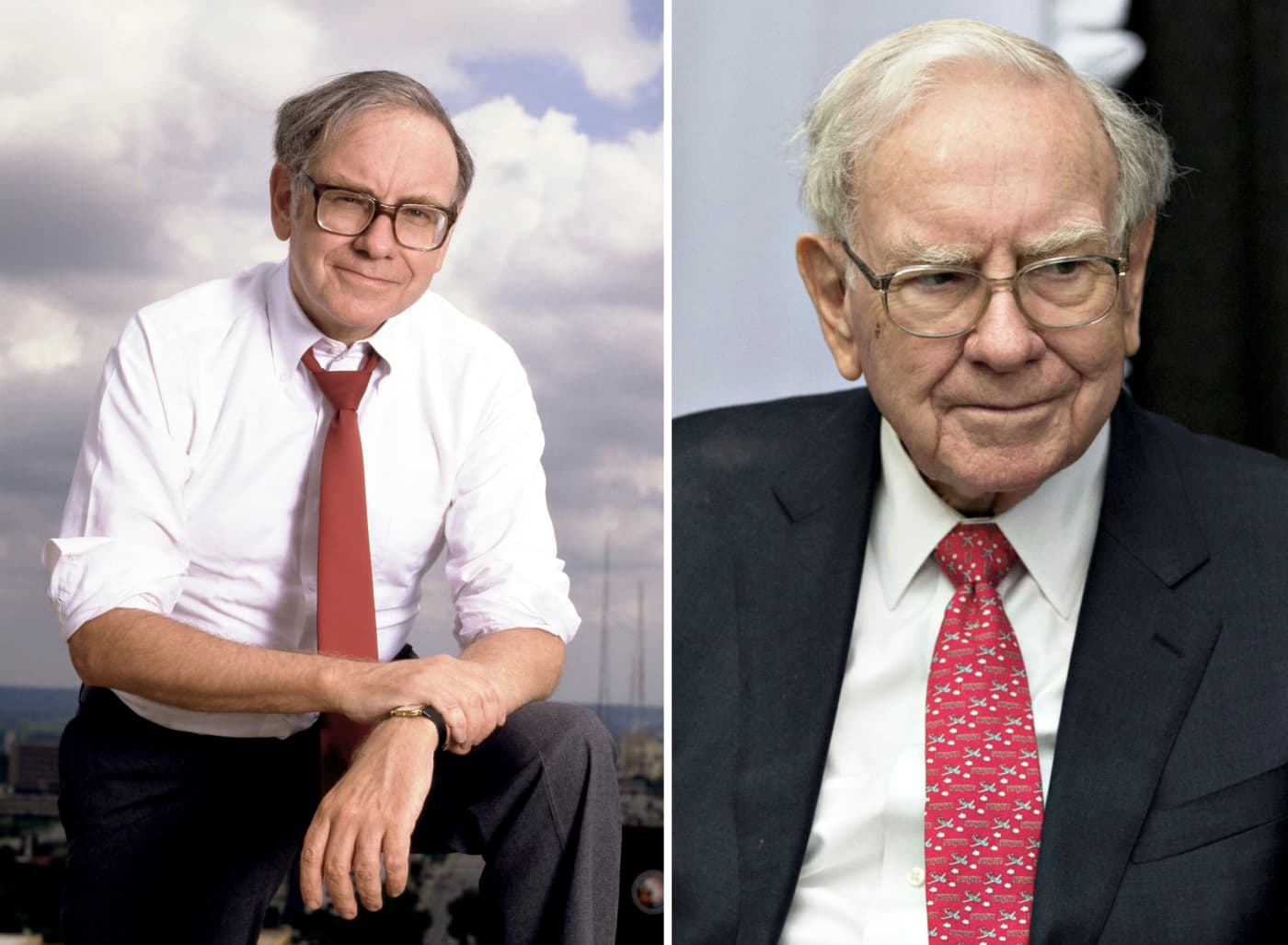

Adding Warren Buffett

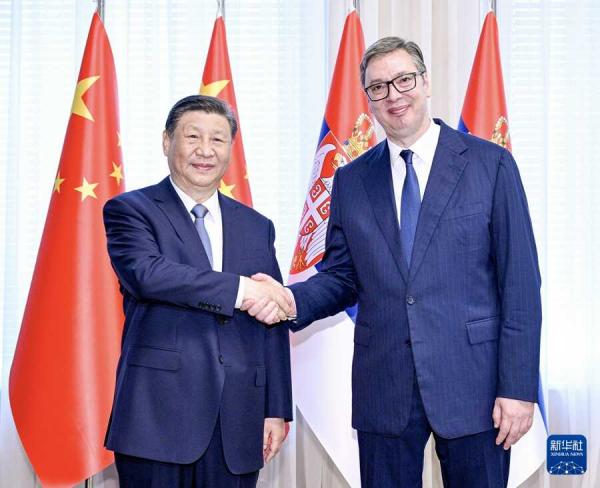

Adding Aleksandar Vučić

Leverage vs. influence

A data point whose \(x\) value is far from the mean of the rest of the \(x\) values is said to have high leverage.

Leverage points have the potential to strongly pull on the regression line.

A point is influential if omitting it from the analysis changes the model enough to make a meaningful difference.

Influence is determined by

- The residual

- The leverage
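For simple regression, leverage has a closed form, \(h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_j (x_j - \bar{x})^2}\), which grows with the distance of \(x_i\) from \(\bar{x}\). The slides do not name a specific influence measure; this Python sketch uses Cook's distance, one standard way to combine the residual and the leverage, on made-up data. In this example the far-out point has the highest leverage and by far the largest Cook's distance, even though its residual is not the largest.

```python
import numpy as np

def leverage_and_cooks(x, y):
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    b1, b0 = np.polyfit(x, y, 1)
    resid = y - (b0 + b1 * x)
    sxx = np.sum((x - x.mean()) ** 2)
    h = 1 / n + (x - x.mean()) ** 2 / sxx             # leverage
    p = 2                                              # intercept and slope
    s2 = np.sum(resid ** 2) / (n - p)                  # s_e squared
    cooks = resid ** 2 / (p * s2) * h / (1 - h) ** 2   # residual and leverage combined
    return h, resid, cooks

# Made-up data: nine points near a line plus one far-out x value
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 30]
y = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1, 18.0, 20.0]
h, resid, cooks = leverage_and_cooks(x, y)
print(np.round(h, 3))
print(np.round(cooks, 2))
```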

Warnings

Influential points can hide in plots of residuals.

Points with high leverage pull the line close to them, so they often have small residuals.

Look for such points in a scatterplot of the original data.

Fit the regression model with and without the points and compare the results.
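Fitting with and without each point can be automated by dropping one point at a time and recording how much the slope moves. A Python sketch on made-up data (ten points exactly on \(y = 2x\) plus one high-leverage point far below the line); `leave_one_out_slopes` is a hypothetical helper name:

```python
import numpy as np

def leave_one_out_slopes(x, y):
    """Refit without each point in turn; return the full slope and each change."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    full_slope = np.polyfit(x, y, 1)[0]
    changes = []
    for i in range(len(x)):
        keep = np.arange(len(x)) != i          # drop point i
        slope_i = np.polyfit(x[keep], y[keep], 1)[0]
        changes.append(slope_i - full_slope)
    return full_slope, np.array(changes)

x = list(range(1, 11)) + [30]
y = [2 * xi for xi in range(1, 11)] + [0]
full_slope, changes = leave_one_out_slopes(x, y)
most_influential = int(np.argmax(np.abs(changes)))
print(full_slope, most_influential)   # dropping the last point restores a slope of 2
```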

Interpreting a regression

Step 1: develop some expectations

Horsepower vs. MPG

More powerful engines are probably less fuel efficient

Relationship is likely roughly linear

The exact relationship depends on the efficiency of the engine

- So the data could be noisy

Step 2: make a picture

Step 3: check the conditions

Quantitative variable condition

Straight enough condition

Outlier condition

Equal spread condition: does the plot thicken?

Conclusion:?
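The "does the plot thicken?" check is normally done by eye on a residual plot. As a rough numeric companion, this Python sketch compares the residual SD for the upper half of \(x\) with that for the lower half; a ratio well above 1 suggests the spread grows with \(x\). It is a heuristic of our own, not a formal test, and the horsepower-like data are simulated.

```python
import numpy as np

def spread_ratio(x, y):
    """Residual SD for the upper half of x divided by residual SD for the lower half."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    b1, b0 = np.polyfit(x, y, 1)
    resid = y - (b0 + b1 * x)
    upper = x > np.median(x)
    return resid[upper].std(ddof=1) / resid[~upper].std(ddof=1)

rng = np.random.default_rng(seed=0)
n = 1000
x = rng.uniform(50, 230, size=n)                               # simulated horsepower
y_even = 40 - 0.15 * x + rng.normal(0, 3, size=n)              # constant spread
y_thick = 40 - 0.15 * x + rng.normal(0, 0.03 * x, size=n)      # spread grows with x
print(spread_ratio(x, y_even), spread_ratio(x, y_thick))
```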

Step 4: identify the units

- Miles per gallon: the number of miles you can travel on one gallon of gas, a measure of fuel efficiency.

- Most gasoline-powered cars get between 10 and 40 MPG, higher being better

- Horsepower: power of the engine.

- Typical values for standard cars are in the 100-200 range, higher meaning more powerful

Step 5: interpret the slope of the regression line

- For every one-unit increase in horsepower, predicted miles per gallon decreases by about 0.158

- Is that a lot or a little?
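One way to judge the size of the slope is to scale it by a realistic change in horsepower, such as the interquartile range from Step 8 (Q1 = 75, Q3 = 126). A short Python calculation with the slide's slope:

```python
b1 = -0.158              # slope from the slides (MPG per horsepower)
q1_hp, q3_hp = 75, 126   # horsepower quartiles from Step 8

change_per_hp = b1 * 1
change_across_iqr = b1 * (q3_hp - q1_hp)   # typical lower-power car vs typical higher-power car
print(round(change_per_hp, 3), round(change_across_iqr, 2))
```

One horsepower barely matters, but across the middle half of cars the predicted MPG differs by about 8, a large share of the typical 10 to 40 MPG range.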

Step 6: determine reasonable values for the predictor variable

Step 7: interpret the intercept

Step 8: solve for reasonable predictor values

Horsepower = Q1 = 75:

\(\hat{y} = b_0 + b_1*x\)

\(\hat{y} = 39.94 + -0.158*75\)

\(\hat{y} = 28.09\)

Horsepower = Median = 93.5:

\(\hat{y} = b_0 + b_1*x\)

\(\hat{y} = 39.94 + -0.158*93.5\)

\(\hat{y} = 25.17\)

Horsepower = Q3 = 126:

\(\hat{y} = b_0 + b_1*x\)

\(\hat{y} = 39.94 + -0.158*126\)

\(\hat{y} = 20.03\)
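The three predictions above can be reproduced in Python with the slide's intercept and slope:

```python
b0, b1 = 39.94, -0.158   # intercept and slope from the slides

def predict_mpg(hp):
    """Predicted MPG for a given horsepower."""
    return b0 + b1 * hp

for label, hp in [("Q1", 75), ("Median", 93.5), ("Q3", 126)]:
    print(label, hp, round(predict_mpg(hp), 2))
```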

Step 9: interpret the residuals and identify their units
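A residual is observed minus predicted, so it carries the units of \(y\) (here, MPG). A Python sketch for a hypothetical car with 100 horsepower and an observed 30 MPG (not from the data set):

```python
b0, b1 = 39.94, -0.158   # intercept and slope from the slides

def residual(hp, observed_mpg):
    """Residual = observed - predicted, measured in MPG."""
    predicted = b0 + b1 * hp
    return observed_mpg - predicted

e = residual(100, 30)    # HYPOTHETICAL car
print(round(e, 2))       # positive: more efficient than the model predicts
```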

Step 10: view the distribution of the residuals
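Besides a histogram, regression output commonly reports a five-number summary of the residuals; a roughly symmetric summary centered near 0 is what we hope to see. A Python sketch on made-up residuals, using NumPy's default linear interpolation for the quartiles (other software may use a different quartile rule):

```python
import numpy as np

def five_number_summary(resid):
    """Min, Q1, median, Q3, max of the residuals."""
    return np.percentile(resid, [0, 25, 50, 75, 100])

resid = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])   # made-up residuals
print(five_number_summary(resid))
```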

Step 11: interpret the residual standard error
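The residual standard error is \(s_e = \sqrt{\sum (y - \hat{y})^2 / (n - 2)}\), a typical size of a residual in the units of \(y\). A Python sketch on made-up data:

```python
import numpy as np

def residual_standard_error(x, y):
    """s_e = sqrt(SSE / (n - 2)) for simple regression; units are those of y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    b1, b0 = np.polyfit(x, y, 1)
    resid = y - (b0 + b1 * x)
    return np.sqrt(np.sum(resid ** 2) / (len(y) - 2))   # n - 2: two estimated coefficients

s_e = residual_standard_error([1, 2, 3, 4], [1, 3, 2, 4])
print(round(s_e, 4))
```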

Step 12: interpret the R squared

Step 13: think about confounders

- What are some confounders, or “lurking variables”?

- Categorical

- Quantitative